Upcoming changes to DataGrab 5

February 25, 2023

DataGrab is getting a much needed overhaul.

DataGrab was initially released back in 2010, and it has remained largely unchanged since then. You configure it to read a CSV, JSON, or XML file and map properties from the file to fields in ExpressionEngine. It then imports the data to create new entries or update existing ones. It was originally written to read from that file continually, over and over and over again. Say your file had 5000 entries and DataGrab was importing entry #4874. Part of its code had to execute a loop 4874 times just to reach that row or item in the file. Before that, it had executed the loop 4873 times for the previous entry, and 4872 times for the one before that. That is a lot of memory consumption and overhead, and it simply doesn't scale well.
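A rough count of that overhead, assuming (as described above) that importing entry $k$ costs exactly $k$ loop iterations over the file:

$$
\sum_{k=1}^{N} k = \frac{N(N+1)}{2}, \qquad N = 5000 \;\Rightarrow\; \frac{5000 \times 5001}{2} = 12{,}502{,}500
$$

Reading the file a single time visits each row once: 5000 iterations instead of roughly 12.5 million, so the work grows linearly with the file size rather than quadratically.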

Had I originally written DataGrab in 2010, I probably would have done it the same way because I didn't know any better. But now I know better. DataGrab 5 is switching to a producer/consumer model using Laravel's Queue. When DataGrab 5 reads your CSV, JSON, or XML file, it will push (or produce) items into the queue. If you are running an import from within the ExpressionEngine control panel, it will immediately start workers that consume those items and import them as entries.
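To make the model concrete, here is a toy sketch of a producer/consumer pair in POSIX shell. It is only an illustration under loud assumptions: a temporary directory stands in for the queue (DataGrab 5 actually uses Laravel's Database queue), and `QUEUE_DIR`, `produce`, and `consume` are hypothetical names, not part of DataGrab. The producer reads the file once and pushes one job per row. The consumer pops jobs until it hits its limit (0 meaning no limit) and then exits.

```shell
#!/bin/sh
# Toy producer/consumer. ASSUMPTION: a directory of files stands in for the
# queue; DataGrab 5 uses Laravel's Database queue, not files on disk.
QUEUE_DIR=$(mktemp -d)

# Producer: read the CSV once, skipping the header row, and push one job
# file per data row. Zero-padded names keep the jobs in file order.
produce() {
  n=0
  tail -n +2 "$1" | while IFS= read -r row; do
    n=$((n + 1))
    printf '%s\n' "$row" > "$QUEUE_DIR/$(printf '%08d' "$n").job"
  done
}

# Consumer: pop up to $1 jobs (0 = no limit), "import" each one, then exit.
consume() {
  limit=$1
  count=0
  for job in "$QUEUE_DIR"/*.job; do
    [ -e "$job" ] || break   # glob matched nothing: the queue is empty
    echo "import: $(cat "$job")"
    rm -f "$job"             # remove the job once it has been handled
    count=$((count + 1))
    if [ "$limit" -ne 0 ] && [ "$count" -ge "$limit" ]; then
      break                  # limit reached: this worker shuts down
    fi
  done
}
```

Calling `produce rows.csv` followed by `consume 50` repeatedly mirrors running the producer once and starting a new worker for each batch. One design caveat: a real queue driver typically reserves each job for a single worker, while this toy does no locking, so two consumers running at the same moment could race for the same file.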

Upcoming changes:

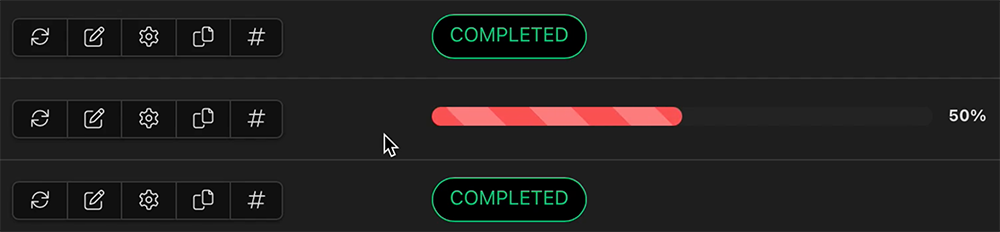

When you import within the control panel and your import is configured to delete non-imported entries, you will see a second, red progress bar. The first, purple progress bar is the consumer importing the entries, and the second is the consumer deleting the other entries. This used to happen in the same request, but hey, since we're using queues, let's take advantage of them and split up the work.

If an import is incomplete or in a waiting state, it will resume as soon as you open the DataGrab module page in the control panel. You won't have to re-initiate the import manually.

The "limit" parameter determines how many entries a single worker will consume before shutting down. If you set the limit to 0, a worker will consume as many entries as it can until it runs up against PHP's max_execution_time or memory limit. When it reaches one of those limits, another worker starts immediately and continues importing entries. Think of the workers as a way to batch the imports.
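A rough way to size those batches, assuming every worker imports a full batch of $L$ entries except possibly the last one, is that importing $N$ entries takes

$$
W = \left\lceil \frac{N}{L} \right\rceil \text{ workers}, \qquad N = 5000,\; L = 50 \;\Rightarrow\; W = 100
$$

With a limit of 0 there is no fixed $L$: the batch size depends on how many entries fit within max_execution_time and the memory limit, so the worker count can't be computed from $N$ alone.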

If you only run imports from within the ExpressionEngine control panel, not much will change for you. If you had imports configured with a "limit" value below 50, upgrading to DataGrab 5 will change the limit to 50. This is because the queue does a much better job of managing its own resources, so we no longer need a "limit" as low as 1 to stay within PHP or server timeout settings.

If you run imports from the CLI (command line), some of these changes might matter more to you. If you use the import:run command with no arguments other than --id, it will automatically produce and consume all the entries from your import file. However, if the import's config sets a "limit", the command will stop the import when it reaches that limit. If you want to keep using the command this way, it is best to set the limit to 0 in the import's config settings, or simply use the --limit=0 argument, which lets the consumer run until it terminates on its own.

```shell
php system/ee/eecli.php import:run --id=27
```
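Adding the --limit=0 argument mentioned above overrides the import's configured limit for this run. This example uses the same import ID as the other commands in this post:

```shell
php system/ee/eecli.php import:run --id=27 --limit=0
```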

The previous command can cause problems if you're importing hundreds or thousands of entries. That is why you can now separate the two processes with a --producer or --consumer argument. If you're importing large amounts of data, running the producer and consumer separately with these arguments is highly recommended. If a customer submits a ticket about trouble importing 10,000 entries and isn't using these CLI commands, my first recommendation will be to use them before any additional support is provided. 😉

```shell
php system/ee/eecli.php import:run --id=27 --producer
```

Running this command will only read entries from your import file and put them into the queue. If you have a daily import, you can set up a cron job to run this command on a schedule.
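As a sketch of that schedule, the crontab entry below would run the producer once a day at 02:00. The site path `/var/www/example.com` is a hypothetical placeholder. Because cron runs with a minimal environment, you may also need to replace `php` with its full path (for example, the output of `which php`).

```
# m h dom mon dow  command  (site path is hypothetical)
0 2 * * * cd /var/www/example.com && php system/ee/eecli.php import:run --id=27 --producer
```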

```shell
php system/ee/eecli.php import:run --id=27 --consumer
```

Running this command will create a single worker to consume entries from the queue. If you set a "limit" of 50, it will import 50 entries and then terminate, so you'll need to run the --consumer command again to continue. Again, the best way to do this is to set up a cron job that runs the command on a schedule, every 1, 3, 5, or 30 minutes (or to use supervisord). Choose any interval that works for you. If the queue is empty and there is nothing to consume, the worker simply terminates, and the next scheduled run tries again.
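For example, a crontab entry like the following would start one consumer every 5 minutes. As with any cron job, the site path is a hypothetical placeholder and `php` may need to be an absolute path.

```
# Start one consumer for import 27 every 5 minutes (site path is hypothetical)
*/5 * * * * cd /var/www/example.com && php system/ee/eecli.php import:run --id=27 --consumer
```

It helps to pick the interval with the limit in mind. If each run imports a full batch, 5000 queued entries with a limit of 50 need 100 runs. At one run every 5 minutes, that is about 500 minutes (roughly 8 hours 20 minutes).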

I hope these changes bring more stability to DataGrab, especially for those importing large amounts of data. At the very least, I can say the code is a lot cleaner, and DataGrab isn't doing as many code gymnastics as it used to when iterating through import files.

Version 5 will hopefully be released in the next few weeks with the default Database queue driver, and later versions will hopefully add support for the Amazon SQS, Redis, and Beanstalkd queue drivers.